The Single-Model Trap: Why Production AI Is Going Compound

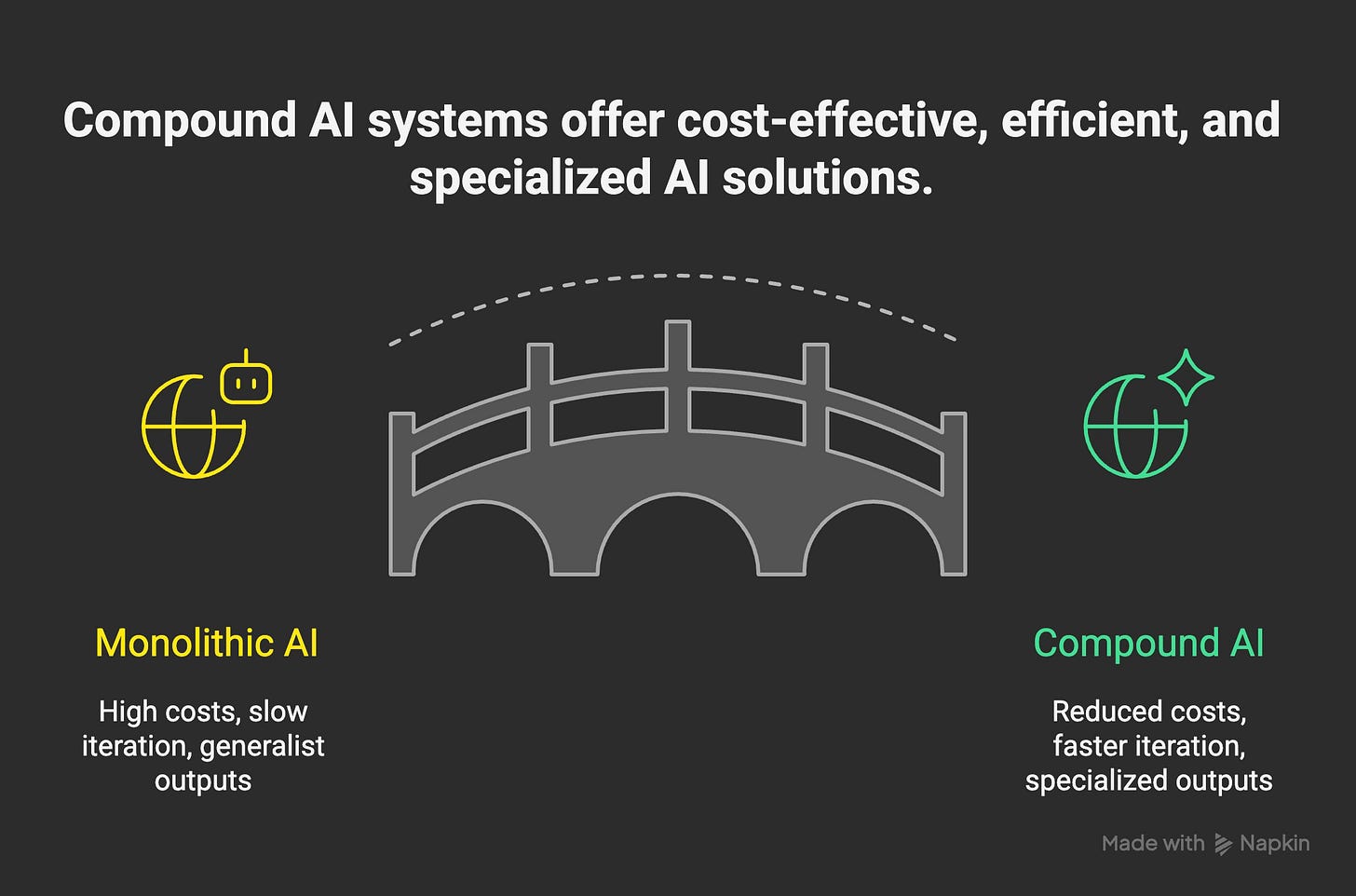

The quiet architectural shift that's letting 3-person teams outship 50-person teams—and why your next AI system should orchestrate, not maximize.

Here’s what nobody tells you about building production AI in 2025:

The most powerful AI systems aren’t using the biggest models.

They’re using the right combination of models.

While everyone’s debating GPT-5 vs. Claude 4, production teams at Databricks, Google, and OpenAI have quietly moved to compound AI systems—architectures that orchestrate multiple specialized models instead of relying on one monolithic giant.

The results? Inference costs cut by 40-60%. Faster iteration. Better outputs.

And most engineers are still building the old way.

The Monolithic Model Myth

For the past three years, the industry sold us a simple story: bigger models solve harder problems.

Need better outputs? Use GPT-4 instead of GPT-3.5.

Still not good enough? Wait for GPT-5.

Want to beat that? Fine-tune an even larger model.

This made sense in 2022. It’s actively costing you money and velocity in 2025.

Here’s why: Single large models are generalists trying to be specialists. They’re expensive to run, slow to iterate on, and optimized for breadth—not depth.

When you route every task through GPT-5 (whether it’s classifying a support ticket or writing a technical spec), you’re paying sports car prices for grocery runs.

What Compound AI Systems Actually Are

A compound AI system breaks complex tasks into components, each handled by the model (or tool) best suited for that specific job.

Instead of one model doing everything:

A retrieval model finds relevant context from your knowledge base

A reasoning model (like GPT-4) plans the approach

A specialized small model handles domain-specific tasks

A verification model checks output quality

Traditional code and APIs fill the gaps

Think of it like building software: you don’t write everything in one massive function. You compose smaller, focused modules.

Same principle. Different abstraction layer.
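To make the software analogy concrete, here is a minimal sketch of that composition in Python. Everything in it is a stand-in: the function names, the shared `state` dict, and the one-entry `KNOWLEDGE_BASE` are assumptions for illustration, and each function is a plain-code placeholder for where a real model call would go.

```python
from typing import Callable

# Each component takes the shared state dict and returns it updated.
Component = Callable[[dict], dict]

# Hypothetical one-entry knowledge base, for illustration only.
KNOWLEDGE_BASE = {"refund": "Refunds go back to the original payment method."}


def retrieve(state: dict) -> dict:
    """Stand-in for a retrieval model: naive keyword lookup."""
    query = state["query"].lower()
    state["context"] = [doc for key, doc in KNOWLEDGE_BASE.items() if key in query]
    return state


def plan(state: dict) -> dict:
    """Stand-in for a reasoning model: choose a strategy."""
    state["plan"] = "answer_from_context" if state["context"] else "escalate"
    return state


def generate(state: dict) -> dict:
    """Stand-in for a specialized small model: draft the reply."""
    if state["plan"] == "answer_from_context":
        state["draft"] = " ".join(state["context"])
    else:
        state["draft"] = ""
    return state


def verify(state: dict) -> dict:
    """Stand-in for a verification model: reject empty drafts."""
    state["verified"] = bool(state["draft"].strip())
    return state


# The pipeline is just an ordered list of components.
PIPELINE: list[Component] = [retrieve, plan, generate, verify]


def run_pipeline(query: str, components: list[Component] = PIPELINE) -> dict:
    """Run each component in order, threading the state through."""
    state = {"query": query}
    for component in components:
        state = component(state)
    return state
```

Calling `run_pipeline("How do I get a refund?")` walks the query through all four steps. Because each step has the same signature, you can replace `generate` with a different model without touching the other three.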

Why This Matters Now

Three years ago at Microsoft, if we wanted to add a feature to a product used by 300 million people, we’d spin up a dedicated team, allocate serious infrastructure, and plan a multi-quarter rollout.

Today, small teams using compound AI systems are shipping comparable functionality in weeks.

The difference isn’t smarter people or better models. It’s better architecture.

The Production Data

Companies using compound AI systems in 2025 are reporting:

Cost efficiency: 40-60% reduction in inference costs by routing simple tasks to smaller, cheaper models and reserving GPT-5/Claude for complex reasoning.

Faster iteration: When you need to improve accuracy on a specific task (say, extracting structured data from invoices), you can swap or fine-tune just that component instead of retraining your entire system.

Better outputs: Specialized models optimized for narrow tasks consistently outperform generalist models on those same tasks—often dramatically.

Easier debugging: When something breaks, you can isolate which component failed instead of debugging a black-box monolith.

Real-World Architecture Example

Let’s say you’re building an AI system to handle customer support tickets.

The monolithic approach (2022-2024):

Route every ticket → GPT-4

Let it classify, respond, and escalate

Cost: $0.03+ per ticket, 3-8 seconds latency

When it fails, you tune prompts and pray

The compound approach (2025):

1. Fast classifier (small fine-tuned model): Categorizes ticket type in about 100 ms, at roughly $0.0001 per ticket

2. Decision router: Based on category, routes to appropriate handler

Simple FAQs → Template responses (no LLM needed)

Standard issues → Specialized 7B model fine-tuned on your docs ($0.001 per response)

Complex issues → GPT-5 with retrieved context ($0.03 per response)

3. Verification layer: Checks sentiment and completeness before sending

4. Escalation system: Routes edge cases to humans with full context
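The four steps above can be sketched in a few dozen lines. This is an illustration under loud assumptions, not a reference implementation: the keyword rules in `classify` stand in for the fine-tuned classifier, the handlers return canned strings instead of calling models, and the names (`Reply`, `HANDLERS`, `route`) are hypothetical. The per-response costs are the ones quoted in the list.

```python
from dataclasses import dataclass

CLASSIFIER_COST = 0.0001  # per ticket, from the figures above


@dataclass
class Reply:
    handler: str
    text: str
    cost_usd: float


def classify(ticket: str) -> str:
    """Placeholder for the small fine-tuned classifier: keyword rules."""
    text = ticket.lower()
    if "password" in text or "opening hours" in text:
        return "faq"
    if "crash" in text or "data loss" in text:
        return "complex"
    return "standard"


def handle_faq(ticket: str) -> Reply:
    # Template response, no LLM call.
    return Reply("template", "Here is our help article on that topic.", 0.0)


def handle_standard(ticket: str) -> Reply:
    # A call to the fine-tuned 7B model would go here.
    return Reply("small_model", f"[7B draft] {ticket}", 0.001)


def handle_complex(ticket: str) -> Reply:
    # A call to the large model with retrieved context would go here.
    return Reply("large_model", f"[large-model draft] {ticket}", 0.03)


HANDLERS = {"faq": handle_faq, "standard": handle_standard, "complex": handle_complex}


def verify(reply: Reply) -> bool:
    """Placeholder verification: only checks the reply is not empty."""
    return bool(reply.text.strip())


def route(ticket: str) -> Reply:
    """Classify, dispatch, verify, and escalate to a human on failure."""
    category = classify(ticket)
    reply = HANDLERS[category](ticket)
    reply.cost_usd += CLASSIFIER_COST  # every ticket pays for classification
    if not verify(reply):
        return Reply("human", "Escalated with full context.", reply.cost_usd)
    return reply
```

With these placeholder rules, `route("I forgot my password")` never touches a model, while `route("The app crashes on export")` goes to the expensive path. The routing table is the part worth getting right; the handlers behind it can change freely.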

Result: Average cost drops from $0.03 to $0.005 per ticket. Response quality improves because each component does what it’s optimized for.

That’s an 83% cost reduction while improving output.
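If you want to check that number against your own traffic, the arithmetic is a weighted average. The per-tier costs below come from the example; the traffic mix (50% FAQ, 35% standard, 15% complex) is an assumption chosen for illustration, not a measured figure, so swap in your own shares.

```python
MONOLITHIC_COST = 0.03    # per ticket, everything routed to the large model
CLASSIFIER_COST = 0.0001  # paid on every ticket in the compound setup

# tier -> (share of traffic, cost per response in USD)
# Shares are an ASSUMED mix for illustration; costs are from the example.
TIERS = {
    "faq": (0.50, 0.0),
    "standard": (0.35, 0.001),
    "complex": (0.15, 0.03),
}


def blended_cost(tiers: dict, classifier_cost: float) -> float:
    """Average cost per ticket: classifier plus traffic-weighted handler cost."""
    total_share = sum(share for share, _ in tiers.values())
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("traffic shares must sum to 1")
    return classifier_cost + sum(share * cost for share, cost in tiers.values())


avg = blended_cost(TIERS, CLASSIFIER_COST)
savings = 1 - avg / MONOLITHIC_COST
print(f"average cost per ticket: ${avg:.5f}, savings: {savings:.1%}")
```

Under that assumed mix the average lands just under $0.005 per ticket. Notice what drives it: the 15% of tickets that still hit the large model account for $0.0045 of that total, so the share of complex tickets matters far more than the price of the small model.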

When Compound Beats Monolithic: The 3-Question Framework

Not every AI system needs this complexity. Here’s how to decide:

Question 1: Do you have distinct task types with different complexity levels?

YES → Compound (route based on complexity)

NO → Monolithic might be fine

Example: Customer support has simple FAQs and complex technical issues. Compound wins.

Question 2: Are cost or latency constraints forcing trade-offs?

YES → Compound (optimize cost/speed per task)

NO → Monolithic is simpler to start

Example: You’re processing 100K+ requests daily. Every millisecond and cent matters. Compound wins.

Question 3: Do you need specialized domain knowledge in specific areas?

YES → Compound (fine-tune specialists)

NO → Monolithic generalist works

Example: You need medical coding accuracy for healthcare records AND natural conversation for intake. Different models excel at each. Compound wins.

If you answered YES to two or more questions, you should be building a compound system.
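The rule is simple enough to write down as code, which is handy if you want to apply it consistently across several projects. A minimal sketch, with hypothetical field names that map one-to-one to the three questions:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    mixed_complexity: bool          # Question 1
    cost_or_latency_pressure: bool  # Question 2
    needs_domain_specialists: bool  # Question 3


def recommend(workload: Workload) -> str:
    """Return 'compound' when at least two of the three answers are YES."""
    yes_count = sum(
        [
            workload.mixed_complexity,
            workload.cost_or_latency_pressure,
            workload.needs_domain_specialists,
        ]
    )
    return "compound" if yes_count >= 2 else "monolithic"
```

For example, `recommend(Workload(True, True, False))` returns `"compound"`, while a workload with a single YES stays on the simpler path.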

The Orchestration Layer: Where the Magic Happens

The hard part isn’t picking models—it’s orchestrating them.

You need:

Routing logic that decides which model(s) to use based on input characteristics

State management to pass context between components

Fallback strategies when a component fails

Monitoring to track performance at the component level

Version control for each component independently

This is where frameworks like LangGraph, CrewAI, and Microsoft Semantic Kernel come in. They handle the plumbing so you can focus on the architecture.
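To show what that plumbing looks like without tying it to any one framework, here is a framework-free sketch of two items from the list: fallbacks and component-level monitoring. It uses only the Python standard library. The wrapper names (`monitored`, `with_fallback`) and both model functions are hypothetical placeholders, and the failing model is rigged to time out so the fallback path is visible.

```python
import time
from collections import defaultdict
from typing import Callable

ModelFn = Callable[[str], str]

# Per-component counters: calls, failures, and total seconds spent.
metrics = defaultdict(lambda: {"calls": 0, "failures": 0, "seconds": 0.0})


def monitored(name: str, fn: ModelFn) -> ModelFn:
    """Wrap a component so every call is counted and timed under `name`."""
    def wrapper(text: str) -> str:
        start = time.perf_counter()
        metrics[name]["calls"] += 1
        try:
            return fn(text)
        except Exception:
            metrics[name]["failures"] += 1
            raise
        finally:
            metrics[name]["seconds"] += time.perf_counter() - start
    return wrapper


def with_fallback(primary: ModelFn, fallback: ModelFn) -> ModelFn:
    """Try the primary component; on any exception, use the fallback."""
    def wrapper(text: str) -> str:
        try:
            return primary(text)
        except Exception:
            return fallback(text)
    return wrapper


def flaky_small_model(text: str) -> str:
    # Placeholder that always fails, to exercise the fallback path.
    raise TimeoutError("small model timed out")


def large_model(text: str) -> str:
    # Placeholder for a call to a larger, more reliable model.
    return f"[large model] {text}"


answer = with_fallback(
    monitored("small", flaky_small_model),
    monitored("large", large_model),
)
```

After one call to `answer("hi")`, `metrics["small"]["failures"]` is 1 and `metrics["large"]["calls"]` is 1, which is exactly the component-level view you need when something breaks. A real system would catch narrower exception types and export these counters to a metrics backend rather than keep them in a dict.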

At my first startup, we scaled to $500K with 15 engineers. Today, you could hit that with 2 engineers using compound AI systems. But only if you architect correctly.

The Hard Truth About Complexity

Compound AI systems are more complex than calling one API.

You’re trading simplicity for:

Control over cost structure

Flexibility to iterate components

Better performance on specialized tasks

Lower vendor lock-in

This is the same trade-off we make everywhere in software: monolith vs. microservices, single database vs. specialized datastores.

The question isn’t whether compound is “better.” It’s whether the benefits justify the complexity for your specific use case.

For prototypes and MVPs? Start simple. One model. Get to market.

For production systems at scale? Compound is increasingly the only economically viable path.

What This Means for Your Career

The engineers winning in 2025 aren’t the ones who know every model’s capabilities.

They’re the ones who know how to compose systems.

This requires thinking like a systems architect, not a prompt engineer:

Understanding latency budgets and cost structures

Designing robust error handling and fallbacks

Monitoring and optimizing pipelines, not just prompts

Making build-vs-buy decisions at the component level

If you’ve built distributed systems, microservices, or data pipelines, you already have 80% of the skills needed. AI models are just another type of component.

The reality check:

In 2025, building production AI is less about waiting for better models and more about architecting better systems.

The teams shipping fastest and most cost-effectively aren’t using secret models. They’re using compound architectures that compose the models everyone has access to.

Single models will keep getting better. But compound systems will keep getting cheaper to run and faster to iterate on.

The question isn’t whether to make this shift. It’s whether you’ll make it before your competitors do.

I want to hear from you: Are you currently using compound AI systems in production? What’s been your biggest challenge? Hit reply and let me know—I read every response.

And if this clicked for you, forward it to your technical co-founder or engineering lead. They’re probably still paying GPT-5 prices for everything.

— Ishmeet

P.S. Building something with compound AI? I’m documenting my journey building agentic systems in stealth. Connect with me on LinkedIn for real-time updates and lessons learned.
