The Agentic Mindset Shift: Thinking in Goals, Not Code

If you can’t let go of control, you’ll never build a useful agent.

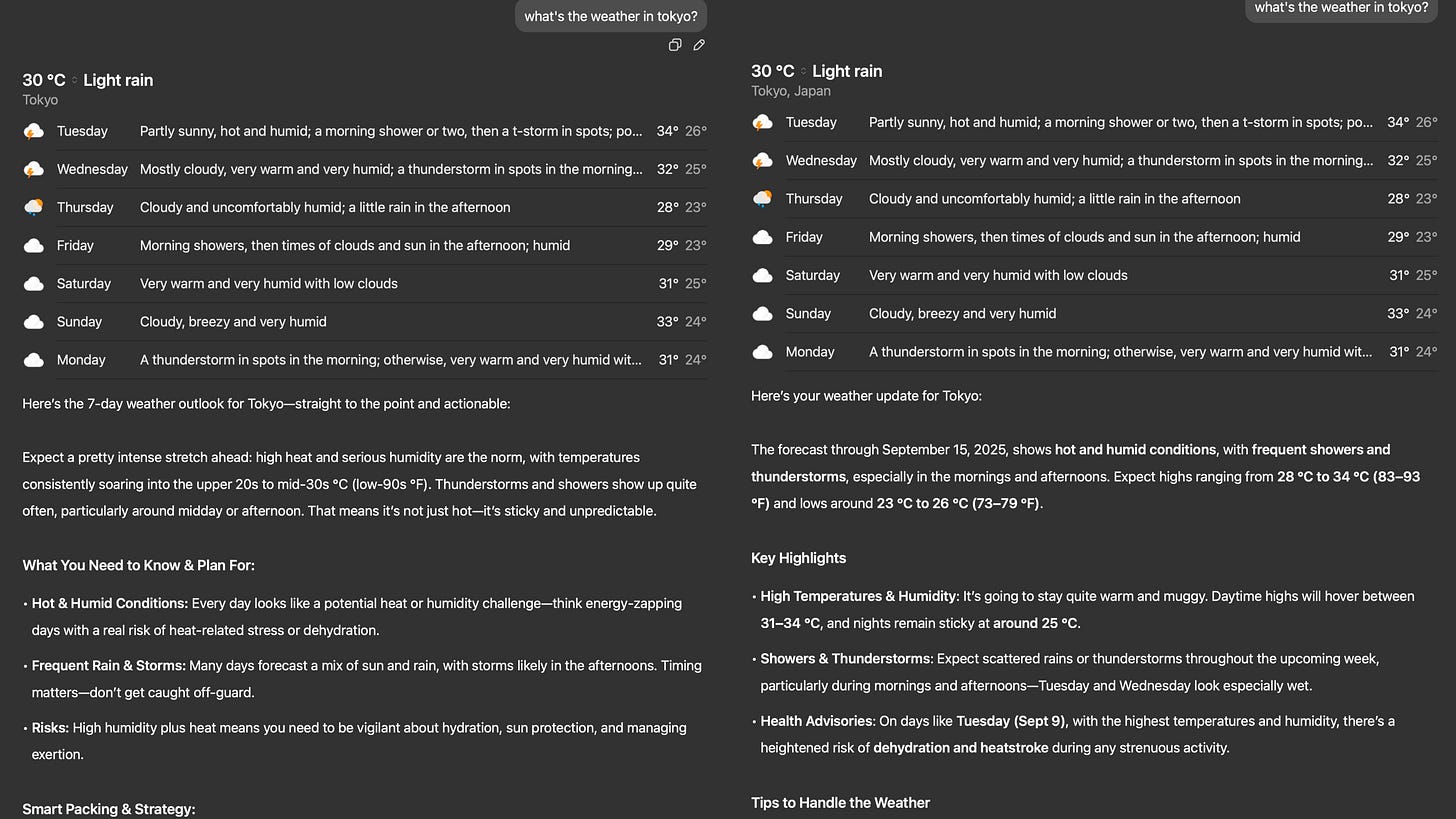

To prepare for my upcoming trip to Japan, I asked ChatGPT about the weather in Tokyo. When I repeated the same question in a new chat, I got two different responses. The facts were the same, but the style and framing shifted.

Not a big deal in casual conversation. But this kind of indeterminism is exactly what makes building AI Agents so challenging.

Coming from a decade of software engineering, I had to unlearn old instincts. Traditional systems are predictable and rule-bound: same input, same output. As a developer, you control the execution path and the type of output—string, integer, float, or object.

With LLMs and Agents, that model breaks down. Same input, same model, different outputs. Context, randomness, and adaptive reasoning all shape the response. The system explores multiple possible paths instead of just one.

This article will help you reframe your mental model for agentic engineering: think in goals, not code.

Deterministic vs. Indeterministic Thinking

Deterministic systems are predictable and rule-bound: the same input always produces the same output. Indeterministic (or probabilistic) systems can follow multiple possible paths, influenced by context, randomness, or adaptive reasoning.
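To make the contrast concrete, here is a minimal sketch in Python. It is a toy stand-in, not how any particular model is implemented: the candidate phrasings, their scores, and the function names are all made up for illustration. The first function always returns the same string; the second samples one phrasing using temperature-scaled weights, so repeated calls can differ in wording while the facts stay the same.

```python
import math
import random


def deterministic_reply(city: str) -> str:
    """Traditional code: the same input always yields the same output."""
    return f"Weather report for {city}"


def sample_reply(candidates: dict, temperature: float, rng: random.Random) -> str:
    """Pick one phrasing by temperature-scaled softmax sampling.

    `candidates` maps a phrasing to a score (hypothetical values).
    """
    if temperature <= 0:
        # Greedy choice: always the highest-scoring phrasing.
        return max(candidates, key=candidates.get)
    phrasings = list(candidates)
    # Higher temperature flattens the weights, so more variety appears.
    weights = [math.exp(candidates[p] / temperature) for p in phrasings]
    return rng.choices(phrasings, weights=weights, k=1)[0]


# Hypothetical phrasings with made-up scores.
CANDIDATES = {
    "Tokyo is mild this week.": 2.0,
    "Expect mild weather in Tokyo.": 1.8,
    "Pack a light jacket for Tokyo.": 1.5,
}

if __name__ == "__main__":
    rng = random.Random()
    print(deterministic_reply("Tokyo"))
    for _ in range(3):
        print(sample_reply(CANDIDATES, temperature=1.0, rng=rng))
```

Setting `temperature` to 0 in this sketch collapses the sampler back into deterministic behaviour, which is one way to picture the balance between the two modes.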

For agents to work in our favour, there should be a balance of both. In my previous article, I talked about how autonomy is an important trait for agents. Indeterminism gives agents this autonomy, so they can explore multiple possible paths given the context and environment. However, they should still converge on useful outcomes.

Agents need autonomy to plan/execute actions toward goals.

- OpenAI

What is Agency?

In Agentic AI, agency means shifting from “I tell you every step” to “I tell you the destination, you figure out the path.” At its core, agency means the capacity to act autonomously toward goals rather than just execute fixed instructions. In the context of AI:

Traditional software = scripted determinism. You hard-code every rule and pathway. The system only does what you explicitly tell it.

Agentic AI = goal-directed autonomy. You specify the goal, and the system reasons, plans, and chooses actions to reach it.

Keeping Agency Safe

This agentic flexibility enables adaptation to new information, creativity in problem solving, and emergent behaviours like tool use or collaboration. But it must be kept in check to avoid “runaway” behaviour such as hallucinations, loops, or irrelevant outputs. Debugging also becomes harder when the execution path is not fixed in advance.

Practical Framework: Combining Both Worlds

Goal-Oriented Design: Define what success looks like, not every step.

Scaffolding: Provide deterministic structures (APIs, tools, workflows).

Exploration: Let agents fill gaps with indeterministic reasoning and exploration.

Guardrails: Add checkpoints to ensure outcomes are relevant and safe.
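The four pieces above can be sketched as a small loop. This is a toy illustration under stated assumptions, not a real agent framework: the `Agent` class, the integer "state", and the three tools are hypothetical, and a random choice stands in for the model's reasoning. The goal is a predicate, the tools are deterministic functions, the choice of tool is left open, and the guardrails reject bad steps and cap the number of attempts.

```python
import random
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    goal: Callable[[int], bool]       # Goal-oriented design: what success looks like
    tools: dict                       # Scaffolding: name -> deterministic function
    guardrail: Callable[[int], bool]  # Guardrails: is this state acceptable?
    max_steps: int = 100              # Guardrails: stop runaway loops
    rng: random.Random = field(default_factory=random.Random)

    def run(self, state: int):
        """Return (final_state, names of accepted tool calls)."""
        trace = []
        for _ in range(self.max_steps):
            if self.goal(state):
                return state, trace
            # Exploration: the path is not scripted. A random pick stands in
            # for whatever reasoning a real model would do here.
            name = self.rng.choice(sorted(self.tools))
            candidate = self.tools[name](state)
            if not self.guardrail(candidate):
                continue  # checkpoint failed: discard this step, try another
            state = candidate
            trace.append(name)
        raise RuntimeError("step budget exhausted before reaching the goal")


# Hypothetical tools for a toy task: reach exactly 10 starting from 0.
TOOLS = {
    "add_1": lambda s: s + 1,
    "add_3": lambda s: s + 3,
    "double": lambda s: s * 2,
}

if __name__ == "__main__":
    agent = Agent(
        goal=lambda s: s == 10,
        tools=TOOLS,
        guardrail=lambda s: 0 <= s <= 10,
    )
    print(agent.run(0))
```

Two runs will usually take different routes to 10, yet every accepted step stays inside the guardrail. Nothing in the loop says which tool to use when; it only says what counts as done and what is off limits.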

The Shift in Thinking

Instead of asking “What steps should the agent follow?”, try asking “What goal do I want, and what guardrails keep it safe?” For example, instead of scripting every step of finding availability and scheduling a meeting, design an agent that ensures:

The meeting is scheduled within your availability (Guardrails)

With the right people (Goal)

On the platform of your choice (Scaffolding)
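One way to picture these three conditions is as checks on the outcome rather than instructions for the path. The sketch below is illustrative only: `Proposal`, `check_proposal`, the attendee names, and the platform names are hypothetical. The agent is free to arrive at a proposal however it likes; the checks decide whether the result is acceptable.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Proposal:
    """A meeting the agent proposes; how it got there is up to the agent."""
    start: datetime
    duration: timedelta
    attendees: frozenset
    platform: str


def check_proposal(proposal, free_windows, required_people, allowed_platforms):
    """Return a list of violations; an empty list means the proposal is acceptable."""
    problems = []
    end = proposal.start + proposal.duration
    # Guardrail: the whole meeting must fit inside one free window.
    if not any(lo <= proposal.start and end <= hi for lo, hi in free_windows):
        problems.append("outside availability")
    # Goal: everyone who must attend is invited.
    missing = set(required_people) - set(proposal.attendees)
    if missing:
        problems.append("missing attendees: " + ", ".join(sorted(missing)))
    # Scaffolding: only platforms that are actually integrated are allowed.
    if proposal.platform not in allowed_platforms:
        problems.append("unsupported platform: " + proposal.platform)
    return problems


# Hypothetical data for illustration.
FREE = [(datetime(2025, 1, 6, 9), datetime(2025, 1, 6, 12))]
REQUIRED = {"ana", "ben"}
PLATFORMS = {"video_call"}

good = Proposal(datetime(2025, 1, 6, 10), timedelta(minutes=30),
                frozenset({"ana", "ben"}), "video_call")
bad = Proposal(datetime(2025, 1, 6, 11, 45), timedelta(minutes=30),
               frozenset({"ana"}), "phone")

if __name__ == "__main__":
    print(check_proposal(good, FREE, REQUIRED, PLATFORMS))
    print(check_proposal(bad, FREE, REQUIRED, PLATFORMS))
```

A rejected proposal can be handed back to the agent together with the list of violations, so it can try again without anyone scripting the next step.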

Building agents means relinquishing micro-control. Your job isn’t to script every move but to design environments where agents can act effectively toward goals. This mindset shift is the difference between workflows branded as “agents” and truly agentic systems.

About This Newsletter

Here, I’ll share insights on how engineers, startup builders, and technical leaders can make the transition into an agentic world—where software doesn’t just respond, it acts.

Ready to take the first step toward becoming agentic? Let’s connect and design a plan that fits your journey.