The Reasoning vs. Speed Paradox: When Slow AI Actually Ships Faster

Why production teams are choosing 10x-more-expensive reasoning models over instant inference—and the 3-question framework that tells you which path actually accelerates your deployment timeline.

If you’re shipping AI into production in 2025, you’ve probably asked some version of this question:

“Why would we pay 10x more for a model that’s 5–10x slower?”

On paper, the choice seems obvious: take the fast, cheap model. Sub-second latency, lower bill, easier to justify in a sprint review.

But here’s how that decision often plays out in real teams:

You pick GPT-5-mini for a critical workflow because “it’s 10x cheaper and way faster.” Three weeks later, you’re drowning in error correction, manual reviews, and edge cases the fast model keeps missing.

Meanwhile, another team that chose OpenAI’s GPT-5 Pro, even though it’s 10x more expensive and 5–10x slower per request, ships their production system in half the time. Their advantage isn’t magic. It’s that they understand a simple truth most engineers miss:

In production, the speed that matters isn’t inference latency. It’s time to reliable output.

This isn’t theory. It’s what’s playing out right now across engineering teams navigating 2025’s biggest AI architecture decision.

The Two Paths Diverging in Production

Fast Inference Models (GPT-5-mini, Claude Haiku 4.5, Gemini 2.5 Flash):

Sub-second response times

Optimized for throughput and cost

Excel at pattern recognition and straightforward tasks

Cost: $0.15–$2.50 per million input tokens

Reasoning Models (GPT-5 Pro, Claude Sonnet/Opus 4.5, Gemini 3 Pro):

5–30 second response times (sometimes minutes for complex tasks)

Use chain-of-thought reasoning before answering

Break problems into logical steps and self-correct

Cost: $15–$120 per million input/output tokens

On paper, fast inference wins every time. 10x cheaper, 10x faster—obvious choice, right?

Wrong.

When “Slow” AI Ships Faster: The Real Numbers

Fast inference models:

Save developers 3.6 hours per week on average

But require significant error correction time

Quality improvements remain “mixed” across implementations

Reasoning models:

Save senior engineers up to 4.4 hours per week

Reduce error rates by 40–75% on complex tasks

Cut onboarding time nearly in half

Microsoft’s data shows reasoning models now generate accurate code in domains where fast models require 3-5 revision cycles. The “slower” model shipped faster because it got it right the first time.

At my startup, we validated this ourselves: Claude 4.5 in Cursor (Thinking, Max Mode) costs us 10x more per request than GPT-5.1-Codex-mini and 4.5x more per request than Haiku 4.5. But it eliminates the back-and-forth that was eating 6–8 hours per week in debugging and refinement.
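To check whether a premium like that pays off at your own volume, you can compute a break-even point: how many requests per week the extra spend can absorb before it costs more than the engineering time it saves. Here is a minimal Python sketch under stated assumptions. The 10x ratio and the 6 hours (low end of the 6–8 above) come from the text. The $100/hour loaded rate and $0.01 per fast request are hypothetical placeholders. It also assumes hours saved stay flat as volume grows, which is a simplification.

```python
def break_even_requests_per_week(
    hours_saved_per_week: float,
    engineer_hourly_rate: float,
    fast_cost_per_request: float,
    price_ratio: float,
) -> float:
    """Weekly request volume at which the reasoning premium equals the engineering time saved.

    Assumes hours saved per week do not change with volume (a simplification).
    """
    if price_ratio <= 1:
        raise ValueError("price_ratio must be greater than 1 for a premium to exist")
    # Extra spend per request when the reasoning model costs price_ratio times as much.
    premium_per_request = fast_cost_per_request * (price_ratio - 1)
    weekly_savings = hours_saved_per_week * engineer_hourly_rate
    return weekly_savings / premium_per_request


# Hypothetical inputs: 6 h/week saved, $100/h loaded rate, $0.01 per fast request, 10x ratio.
n = break_even_requests_per_week(6, 100, 0.01, 10)
print(f"break-even at about {n:,.0f} requests/week")
```

With those placeholder inputs, the premium is $0.09 per request against $600 of saved time per week. That puts break-even near 6,667 requests per week. Below that volume, the slower model is cheaper overall. Well above it, you would want a larger measured time saving, or you would route only part of the traffic to the reasoning tier.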

Total cost of ownership? Lower with reasoning models. Time to production? Faster.
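To make “time to reliable output” concrete, here is a toy Python model that charges every attempt its inference latency plus the human review around it. It is a sketch under stated assumptions, not a measurement. The `ModelProfile` fields are hypothetical, and so is every number: 1 s vs. 30 s latency, 10 minutes of review per attempt, and 4 cycles vs. 1. The cycle counts loosely echo the 3–5 revision cycles mentioned above.

```python
from dataclasses import dataclass


@dataclass
class ModelProfile:
    """Hypothetical per-task profile; replace every number with your own measurements."""
    name: str
    latency_s: float    # inference time per attempt, in seconds
    review_min: float   # human review/fix time per attempt, in minutes
    attempts: float     # attempts (revision cycles) until the output is accepted


def time_to_reliable_output_min(p: ModelProfile) -> float:
    """Minutes until an accepted result: each attempt pays its latency plus its review time."""
    per_attempt_min = p.latency_s / 60 + p.review_min
    return p.attempts * per_attempt_min


# Illustrative inputs only: a 1 s model needing 4 cycles vs. a 30 s model needing 1.
fast = ModelProfile("fast", latency_s=1, review_min=10, attempts=4)
reasoning = ModelProfile("reasoning", latency_s=30, review_min=10, attempts=1)

for profile in (fast, reasoning):
    print(f"{profile.name}: {time_to_reliable_output_min(profile):.1f} min")
```

Under these assumptions, the reasoning model is 30x slower per call. Yet it reaches an accepted answer about four times sooner: roughly 10.5 minutes vs. 40. Review time dominates, and the reasoning model pays it once instead of four times.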

The Framework: 3 Questions That Reveal Your Path

After analyzing production implementations across financial services, healthcare, and our own stealth build, here’s the decision framework that actually works:

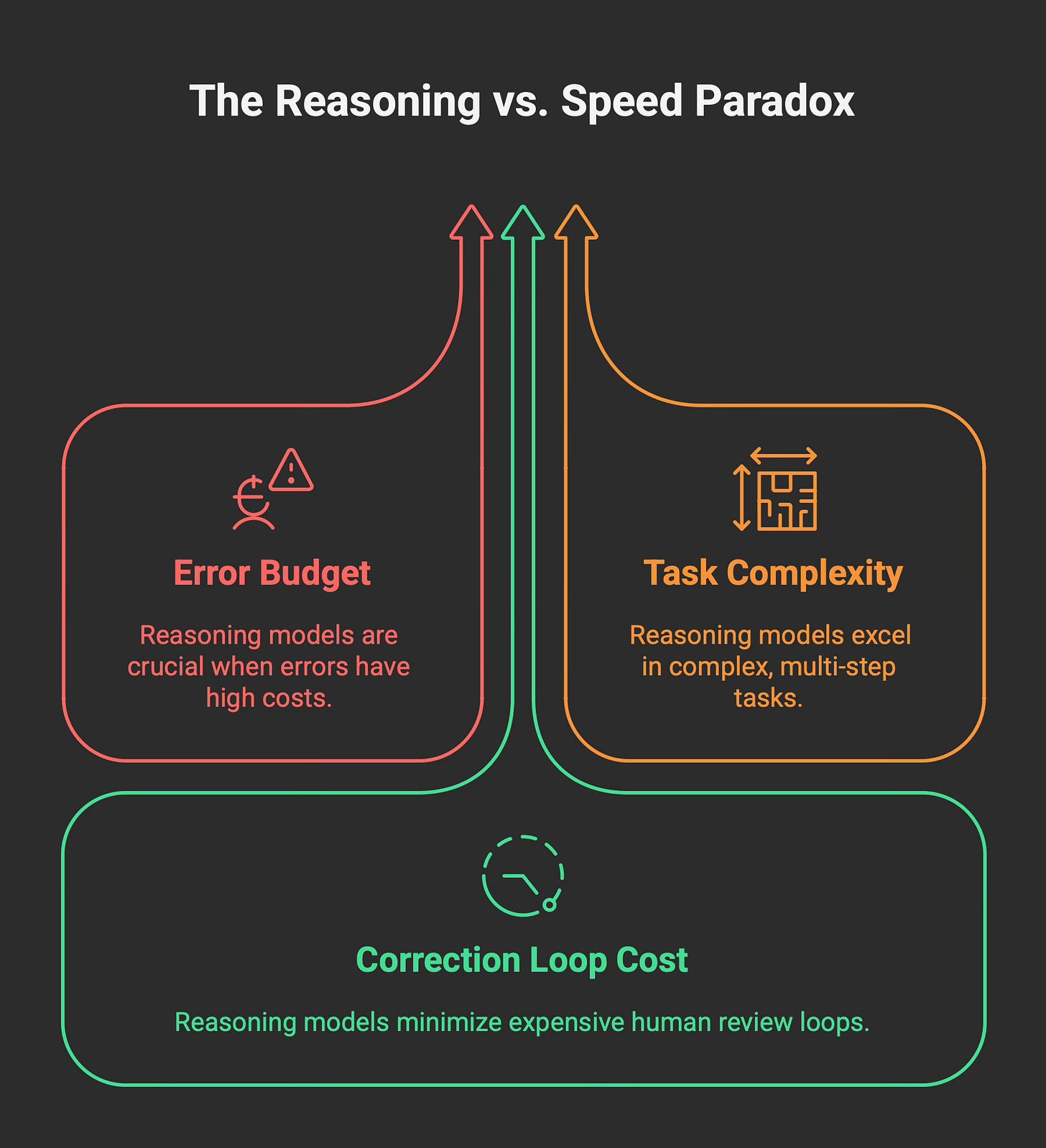

Question 1: What’s Your Error Budget?

Use Fast Inference if:

Errors are acceptable or easily recoverable

You have human review already built in

Mistakes don’t cascade or compound

Example: Content recommendations, draft generation, brainstorming

Use Reasoning Models if:

Errors have regulatory, financial, or safety consequences

Manual correction costs exceed compute costs

Mistakes create downstream technical debt

Example: Financial compliance, medical diagnostics, code review for production systems, legal document analysis

Question 2: Is This Multi-Step Logic or Pattern Matching?

Use Fast Inference if:

Task is single-step or straightforward classification

Historical patterns strongly predict outcomes

Speed/throughput is the primary KPI

Example: Image classification, sentiment analysis, simple Q&A, data extraction

Use Reasoning Models if:

Task requires breaking down a problem into logical steps

Context and nuance change the answer significantly

You need explainable decisions (for compliance or debugging)

Example: Complex code refactoring, multi-constraint optimization, debugging distributed systems, strategic planning

Question 3: What’s the Correction Loop Cost?

Use Fast Inference if:

Correction is automated or cheap

High volume justifies some failures

Output is intermediate, not final

Example: First-pass code suggestions, search result ranking, A/B test generation

Use Reasoning Models if:

Human review is expensive (engineering time, specialist time)

Each error triggers manual investigation

Failures block deployment or require rollback

Example: Database migration scripts, infrastructure-as-code, security patches, customer-facing automation
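If you have dozens of workflows to triage, the three questions can be encoded as a small decision function. This is a minimal Python sketch. The `Workflow` field names are hypothetical labels for the answers to Questions 1–3. Treating any single “yes” as decisive is one conservative reading of the framework, not the only one; a weighted score would be a reasonable alternative.

```python
from dataclasses import dataclass


@dataclass
class Workflow:
    """Answers to the three questions for one workflow (field names are hypothetical)."""
    name: str
    high_error_stakes: bool       # Q1: regulatory, financial, or safety consequences?
    multi_step_logic: bool        # Q2: needs logical decomposition, not pattern matching?
    expensive_correction: bool    # Q3: errors need costly human review or block deploys?


def choose_tier(w: Workflow) -> str:
    """Return "reasoning" if any question points that way, otherwise "fast".

    Treating one "yes" as decisive is a conservative reading of the framework.
    """
    if w.high_error_stakes or w.multi_step_logic or w.expensive_correction:
        return "reasoning"
    return "fast"


workflows = [
    Workflow("sentiment analysis", False, False, False),
    Workflow("database migration scripts", True, True, True),
]
for w in workflows:
    print(f"{w.name}: {choose_tier(w)}")
```

Both example workflows come from the lists above. Sentiment analysis lands on fast inference, and database migration scripts land on a reasoning model.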

The Hybrid Architecture Pattern

The teams shipping fastest in November 2025 aren’t choosing one or the other. They’re architecting hybrid systems:

Layer 1 (Fast Inference):

Initial screening and routing

High-volume, low-risk decisions

User-facing responsiveness

Layer 2 (Reasoning Models):

Complex cases escalated from Layer 1

Final validation before critical actions

Tasks where explanation is required
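One way the two layers could fit together works like this. Layer 1 screens every request and answers directly only when the request is low-risk and it is confident. Everything else escalates to Layer 2, along with Layer 1's draft. This is a Python sketch, not a production router. `fast_model` and `reasoning_model` are placeholder callables you would wire to your providers' SDKs. The `confidence` score and the 0.8 threshold are also assumptions. A model's self-reported confidence is often poorly calibrated, so a separate validator score may work better.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class Draft:
    answer: str
    confidence: float  # score in [0, 1]; how it is produced is an assumption of this sketch


def route(
    request: str,
    high_risk: bool,
    fast_model: Callable[[str], Draft],
    reasoning_model: Callable[[str], str],
    min_confidence: float = 0.8,
) -> Tuple[str, str]:
    """Return (answer, layer_used). Layer 1 screens every request; Layer 2 handles escalations."""
    draft = fast_model(request)
    if not high_risk and draft.confidence >= min_confidence:
        return draft.answer, "fast"
    # Escalate: complex or high-risk work goes to Layer 2 with Layer 1's draft as context.
    prompt = f"{request}\n\nDraft from the screening model:\n{draft.answer}"
    return reasoning_model(prompt), "reasoning"


# Stand-in models for illustration; replace with real API calls.
def stub_fast(req: str) -> Draft:
    # Pretends short requests are easy (high confidence) and long ones are not.
    return Draft(answer=f"fast:{req}", confidence=0.95 if len(req) < 20 else 0.4)


def stub_reasoning(prompt: str) -> str:
    return "reasoned answer"


print(route("reset my password", False, stub_fast, stub_reasoning))
print(route("reconcile Q3 ledger across subsidiaries", False, stub_fast, stub_reasoning))
print(route("approve wire transfer", True, stub_fast, stub_reasoning))
```

Passing the draft forward lets Layer 2 act as a final validation step rather than starting from scratch. If the draft tends to anchor the reasoning model on Layer 1's mistakes, drop it from the prompt.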

The Implementation Checklist

Before you choose, measure these in your specific context:

For Fast Inference:

Run 100 production-like test cases

Measure: accuracy, edge case handling, consistency

Calculate: error correction time × error rate

If (correction cost) < (reasoning premium), use fast inference

For Reasoning Models:

Test on your 10 hardest production cases

Measure: first-pass accuracy, explanation quality, self-correction

Calculate: (avoided errors × correction cost) - (reasoning premium)

If positive, reasoning justifies the cost
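Both checklist formulas fit in a few lines of Python. This sketch assumes you have comparable error rates for both tiers from your own test runs. It also assumes correction time, hourly rate, volume, and the reasoning premium all cover the same period. Every input value in the example is a hypothetical placeholder.

```python
def expected_correction_cost(error_rate: float, correction_hours: float,
                             hourly_rate: float, volume: int) -> float:
    """Fast-inference check: error rate x correction time, priced and scaled to volume."""
    return error_rate * correction_hours * hourly_rate * volume


def reasoning_net_value(fast_error_rate: float, reasoning_error_rate: float,
                        correction_hours: float, hourly_rate: float,
                        volume: int, reasoning_premium: float) -> float:
    """Reasoning check: (avoided errors x correction cost) - reasoning premium.

    Positive means the reasoning model pays for itself over this volume.
    """
    avoided_errors = (fast_error_rate - reasoning_error_rate) * volume
    cost_per_error = correction_hours * hourly_rate
    return avoided_errors * cost_per_error - reasoning_premium


# Hypothetical monthly inputs: 12% vs. 3% error rates, 30 min per fix at $100/h,
# 1,000 requests, and a $300 reasoning premium for those same requests.
fast_cost = expected_correction_cost(0.12, 0.5, 100, 1000)
net = reasoning_net_value(0.12, 0.03, 0.5, 100, 1000, 300)
print(f"fast-model correction cost: ${fast_cost:,.0f}")
print(f"reasoning net value: ${net:,.0f} -> {'reasoning' if net > 0 else 'fast inference'}")
```

With these placeholders, the fast model incurs about $6,000 of correction work against a $300 premium. The net value of switching comes to about $4,200, so reasoning wins. The second formula also accounts for the reasoning model's own residual errors, which the simpler fast-inference check ignores. That makes the second formula the stricter test.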

Hybrid System Design:

Categorize your workflows by complexity and stakes

Route simple/low-risk → fast inference

Route complex/high-risk → reasoning models

Monitor and adjust routing rules based on real accuracy data
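The last step, adjusting routing from real accuracy data, can start as a per-category table. It moves a category to the reasoning tier once the fast tier's observed accuracy falls below a target. Here is a minimal Python sketch. The target accuracy, the minimum sample count, and the category names are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict


class RoutingTable:
    """Per-category routing fed by reviewed fast-tier outcomes (thresholds are illustrative)."""

    def __init__(self, target_accuracy: float = 0.95, min_samples: int = 50):
        self.target_accuracy = target_accuracy
        self.min_samples = min_samples
        self.tier: Dict[str, str] = defaultdict(lambda: "fast")  # unseen categories start fast
        self.correct: Dict[str, int] = defaultdict(int)
        self.total: Dict[str, int] = defaultdict(int)

    def record(self, category: str, was_correct: bool) -> None:
        """Log one reviewed fast-tier result and re-evaluate that category's route."""
        self.total[category] += 1
        self.correct[category] += int(was_correct)
        if self.total[category] >= self.min_samples:  # wait for enough data before switching
            accuracy = self.correct[category] / self.total[category]
            self.tier[category] = "fast" if accuracy >= self.target_accuracy else "reasoning"

    def route(self, category: str) -> str:
        return self.tier[category]


table = RoutingTable(target_accuracy=0.9, min_samples=10)
for i in range(10):
    table.record("invoice extraction", was_correct=True)
    table.record("contract clauses", was_correct=(i % 2 == 0))  # 50% accuracy
print(table.route("invoice extraction"), table.route("contract clauses"))
```

This simple design has one caveat. Once a category moves to the reasoning tier, it stops producing fast-tier outcomes, so it can never move back. Sending a small share of that category's traffic to the fast model as shadow traffic keeps the accuracy data fresh.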

The pattern is clear: Fast inference for volume, reasoning for value.

The Counterintuitive Truth

At Microsoft, we learned that scale isn’t about doing more faster—it’s about doing the right things reliably. The same lesson applies to AI model selection.

Slow reasoning models often ship faster than fast inference models. Not in spite of being slower, but because they eliminate the iteration tax.

Your $50K decision isn’t really about reasoning vs. speed. It’s about understanding what “fast” actually means in production.

Sometimes, the fastest path to done is taking a few extra seconds to think.

Building with reasoning models in production? Hit reply and tell me—what’s the hardest part of the speed vs. accuracy trade-off in your domain? I read and respond to every reply.

If this framework helped you think differently about your AI architecture, forward it to your tech lead. They’re probably making this decision right now.

– Ishmeet.

I’m documenting my journey building agentic systems in stealth. Connect with me on LinkedIn for real-time updates and lessons learned.