Agent Washing: Why Not All ‘AI Agents’ Are Created Equal

Semantic Inflation: Why Everything Is Now Called an “AI Agent”

Two weeks ago, Grammarly announced a suite of “AI agents” designed to help with everyday writing tasks—things like finding citations, paraphrasing text, or polishing tone. All of these agents probably add immense value to user workflows. But are they actually Agents? Let’s find out.

The “Agent” branding today sits at an intersection: somewhere between the strongest wave of marketing momentum and a technological leap that has reshaped how we perceive interfaces and software. True agentic systems exhibit enough agency to reason, plan, and act toward a goal. The last piece—the goal—is the most important.

What is an Agent?

While there are hundreds of definitions for Agents, here is what some of the first movers have to say:

OpenAI: Agents are systems that independently accomplish tasks on your behalf.

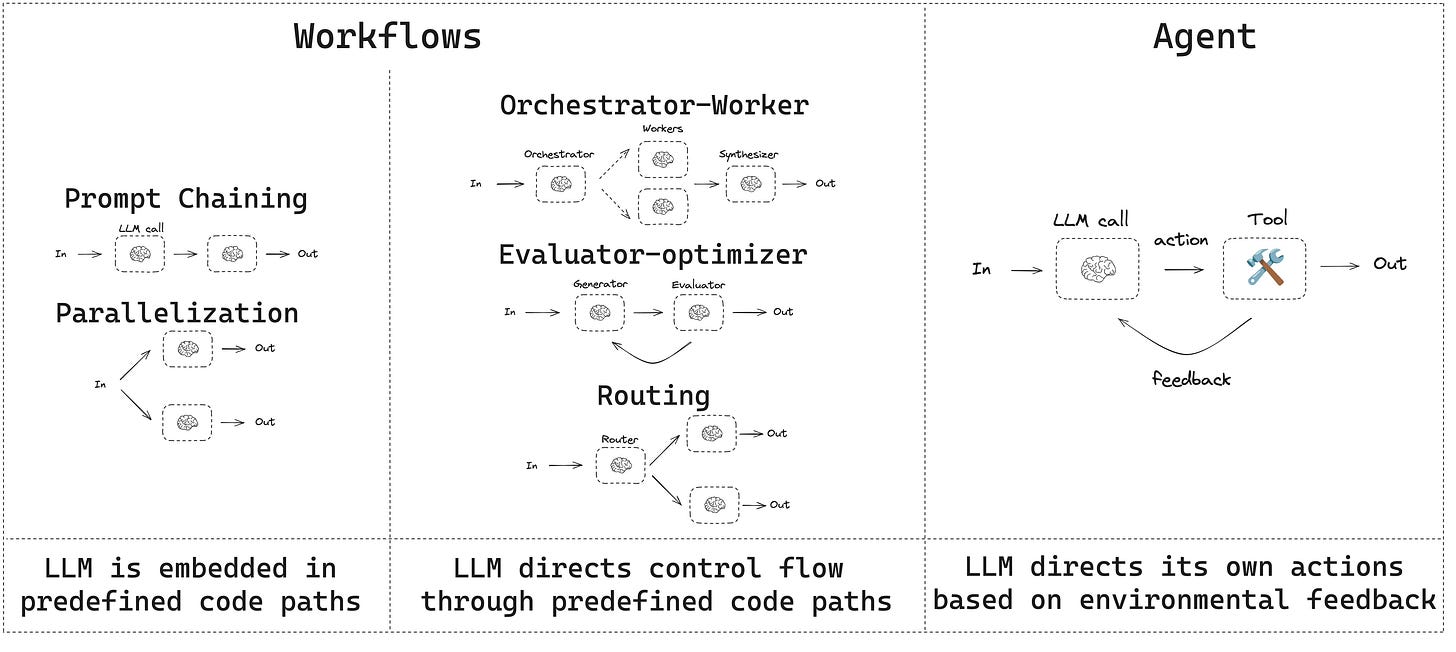

LangChain: An AI agent is a system that uses an LLM to decide the control flow of an application.

While OpenAI’s definition is very generic, Anthropic takes a more definitive stance by including autonomy as an important trait. LangChain, from a technical standpoint, clarifies how autonomy manifests: in the control flow. Google’s definition, on the other hand, feels contradictory: an AI system should not “pursue goals” but rather work toward them. Completing tasks can also be interpreted broadly. ChatGPT can search the internet (a task) for a user, but is that truly agentic? Gemini can write code (a task), but does that qualify as agentic?

For the purposes of this article, I’ll stick with the following working definition:

An AI Agent is a system that uses an LLM to decide the control flow of an application, leveraging tools and autonomy to accomplish the given goal.

This combines Anthropic’s focus on autonomy with LangChain’s emphasis on control flow—giving us a more complete picture of what Agents really are.
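To make the control-flow distinction concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: `search` and `summarize` are hypothetical tools, and `stub_llm` is a hard-coded stand-in for a real model call. The point is only who decides the next step: the developer (`fixed_workflow`) or the model (`agent`).

```python
from typing import Callable, Dict, List

# Hypothetical tools; real ones would call external APIs.
def search(query: str) -> str:
    return f"results for {query}"

def summarize(text: str) -> str:
    return f"summary of {text}"

TOOLS: Dict[str, Callable[[str], str]] = {"search": search, "summarize": summarize}

def fixed_workflow(goal: str) -> str:
    """Developer-decided control flow: the same steps run every time."""
    return summarize(search(goal))

def stub_llm(goal: str, history: List[str]) -> str:
    """Stand-in for an LLM call (an assumption, not a real API).

    Returns the name of the next tool to run, or "finish".
    """
    if not history:
        return "search"
    if len(history) == 1:
        return "summarize"
    return "finish"

def agent(goal: str,
          decide: Callable[[str, List[str]], str] = stub_llm,
          max_steps: int = 5) -> str:
    """Model-decided control flow: `decide` picks each next step."""
    history: List[str] = []
    current = goal
    for _ in range(max_steps):
        action = decide(goal, history)
        if action == "finish":
            break
        current = TOOLS[action](current)  # run the tool the model chose
        history.append(action)
    return current
```

With the stub, both functions happen to produce the same output. The difference is structural: swapping `decide` changes what `agent` does, while `fixed_workflow` can only ever run its two hard-coded steps.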

Agentic Traits

Now that we’ve defined an Agent, what traits should it have? Fortunately, there’s broad alignment here:

Autonomy: Makes decisions (vs follows rules).

Goal‑driven: Acts toward an objective, potentially through multiple steps or tools.

Reasoning & Planning: Adapts mid-stream (not linear scripting).

Tool & Memory-Oriented: Uses APIs, stores context, plans actions.

Dynamic Control Flow: Chooses next action based on outcomes.

Guardrails / Safety: Applies checks, fails safely, escalates when needed.
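As a rough illustration of how the last two traits can fit together, the sketch below wraps a decision loop in three simple guardrails: a tool allowlist, an approval gate for risky actions, and a step budget. The tool names, the `decide` callback (a stand-in for an LLM), and the `approve` callback (a stand-in for a human reviewer) are all hypothetical; a production system would need far more than this.

```python
from dataclasses import dataclass, field
from typing import Callable, List

@dataclass
class RunResult:
    status: str                      # "done", "escalated", or "budget_exhausted"
    log: List[str] = field(default_factory=list)

ALLOWED = {"read_logs", "restart_service"}   # hypothetical tool allowlist
NEEDS_APPROVAL = {"restart_service"}         # hypothetical risky actions

def run_with_guardrails(
    decide: Callable[[List[str]], str],
    max_steps: int = 4,
    approve: Callable[[str], bool] = lambda action: False,
) -> RunResult:
    """Run a decision loop; stop safely on unknown or unapproved actions."""
    result = RunResult(status="budget_exhausted")
    for _ in range(max_steps):
        # Dynamic control flow: the next action depends on outcomes so far.
        action = decide(result.log)
        if action == "finish":
            result.status = "done"
            return result
        blocked = action not in ALLOWED or (
            action in NEEDS_APPROVAL and not approve(action)
        )
        if blocked:
            # Fail safely: record the attempt and hand off to a human.
            result.status = "escalated"
            result.log.append(f"blocked:{action}")
            return result
        result.log.append(f"ran:{action}")  # a real system would call the tool here
    return result  # step budget ran out before the agent finished
```

For example, a `decide` function that keeps asking for `read_logs` ends with status `budget_exhausted`, while one that asks for an unknown tool is escalated on the first step.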

Some Real Agentic Systems

OpenAI’s ChatGPT Agent: can autonomously handle multi-step real‑world tasks via a virtual computer, with safety checks.

Microsoft Copilot + Azure SRE Agent: elevated from pair-programmer to peer that autonomously addresses site reliability issues; daily agent usage has more than doubled year‑over‑year

Coding Agents (Cursor, Windsurf): extend beyond autocomplete by reasoning about intent, making multi-step code edits and refactoring projects.

The Marketing Spin

According to a partner at a16z, some startups use the “AI agent” label to command higher pricing for what are essentially chat interfaces over knowledge bases. When routine tasks or prompt-based workflows with minimal decision-making are labeled “agents,” the term loses its meaning, and potentially its perceived value. The practice works in the companies’ favour: they can demand higher prices because many people in leadership and decision-making roles have minimal knowledge of what an agent actually is.

How Can You Avoid Agent Washing?

For product leaders & technologists:

Be cautious of over-branding: demand substance, autonomy, planning capabilities.

Avoid hype traps: Differentiate “agentic” from “branded convenience.”

Invest in real agent design: with orchestration, guardrails, and evaluation layers.

Set expectations: Agents are powerful, but concerns remain—oversight, hallucinations, safety, and liability.

If you’re looking for a more detailed breakdown, I’ve created a free checklist that helps identify whether a system is truly agentic. Click here to download.

Closing Thoughts

Semantic inflation of “AI Agent” is more than marketing fluff—it dilutes meaning and can mislead decision-makers. Whether Grammarly fits the criteria for an agentic system, I’ll leave for you to decide.

True agentic systems offer autonomy, adaptability, and real utility—but require careful architecture and design. Use the insights (and the checklist) to push back against jargon—and to design agents that are genuinely capable.

About This Newsletter

Here, I’ll share insights on how engineers, startup builders, and technical leaders can make the transition into an agentic world—where software doesn’t just respond, it acts.

Ready to take the first step toward becoming agentic? Let’s connect and design a plan that fits your journey.